This feature is incredibly exciting, and it feels like it hasn’t received the attention it deserves amid all the other recent updates. There are certainly a lot of innovative updates arriving in the Fabric platform every day!

In this post, I’ll show you how to automatically export data from your semantic models to Delta tables in OneLake. You only need to set up the configuration once, and Fabric will take care of exporting and updating the data for you, with no need for pipelines, dataflows, or scripts. It’s that simple!

Prerequisites

OneLake integration for semantic models is exclusively available with Power BI Premium P and Microsoft Fabric F subscription tiers. This feature isn’t accessible through Power BI Pro, Premium Per User licenses, or Power BI Embedded A/EM plans.

1. A workspace with at least one import-mode semantic model hosted on a Power BI Premium or Fabric capacity.

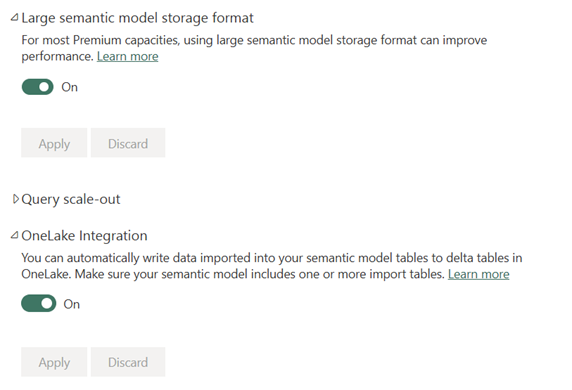

2. The Large semantic model storage format option enabled for that model.
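If you would rather check the second prerequisite from code than from the settings pane, here is a minimal sketch. It assumes the Power BI REST API's Get Dataset In Group call, whose response includes a `targetStorageMode` field (`PremiumFiles` for the large format, `Abf` for the small one). The function names are my own, and obtaining the access token is left out.

```python
import json
import urllib.request

API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def uses_large_storage_format(dataset: dict) -> bool:
    """Return True when the dataset metadata reports the large storage format."""
    # "PremiumFiles" means large format; "Abf" means small format.
    return dataset.get("targetStorageMode") == "PremiumFiles"


def fetch_dataset(group_id: str, dataset_id: str, token: str) -> dict:
    """Fetch the metadata of one semantic model (hypothetical helper)."""
    url = f"{API_ROOT}/groups/{group_id}/datasets/{dataset_id}"
    request = urllib.request.Request(
        url, headers={"Authorization": f"Bearer {token}"}
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Calling `uses_large_storage_format(fetch_dataset(group_id, dataset_id, token))` then tells you whether the model is ready for OneLake integration on this point.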

Permissions

The user needs the Contributor role in order to create shortcuts that link the semantic model’s Delta tables into a Lakehouse.

1. Semantic model settings

Go to the settings pane of the semantic model whose data you would like to export to OneLake and make sure both options, Large semantic model storage format and OneLake integration, are enabled.

In addition, make sure that the following settings are enabled in the Power BI Admin portal:

1. Semantic models can export data to OneLake: If this option is turned off, users cannot enable OneLake integration for their semantic models, and any models already set up for OneLake integration will stop exporting import tables to OneLake. By default, OneLake integration is enabled for the entire organization.

2. Users can store semantic model tables in OneLake: This setting allows specific users, or all users, to configure OneLake integration for their models. If it is disabled for a particular user, they will no longer be able to enable OneLake integration. However, any semantic models they’ve already set up for OneLake integration will continue exporting import tables.

Both options are enabled by default and can be managed by global and tenant administrators.

2. Refresh

After OneLake integration has been enabled, a manual or scheduled refresh must be performed on the semantic model for the data to be written to Delta tables in OneLake.
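A manual or scheduled refresh from the Power BI service is all you need here. If you prefer to start that first refresh from code, the sketch below shows one way, assuming the Power BI REST API's Refresh Dataset In Group endpoint. The function names are hypothetical, and you need to supply an access token that is allowed to refresh the model.

```python
import json
import urllib.request

API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def build_refresh_request(
    group_id: str, dataset_id: str, token: str
) -> urllib.request.Request:
    """Build (but do not send) the POST request that starts a refresh."""
    url = f"{API_ROOT}/groups/{group_id}/datasets/{dataset_id}/refreshes"
    body = json.dumps({"notifyOption": "NoNotification"}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


def trigger_refresh(group_id: str, dataset_id: str, token: str) -> int:
    """Send the refresh request and return the HTTP status code."""
    request = build_refresh_request(group_id, dataset_id, token)
    with urllib.request.urlopen(request) as response:
        return response.status
```

The refresh runs asynchronously, so a successful call only means the request was accepted. The Delta tables appear in OneLake once the refresh itself has finished.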

3. Import data into Lakehouse

The next step is to link the exported data into your Lakehouse by creating shortcuts.

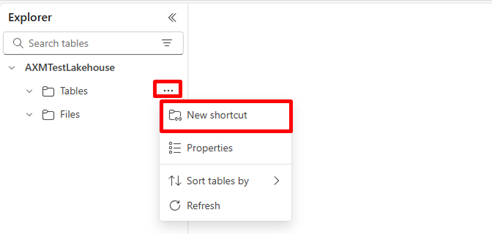

1. Go to your Lakehouse.

2. Under Tables, select the three dots and then select New shortcut.

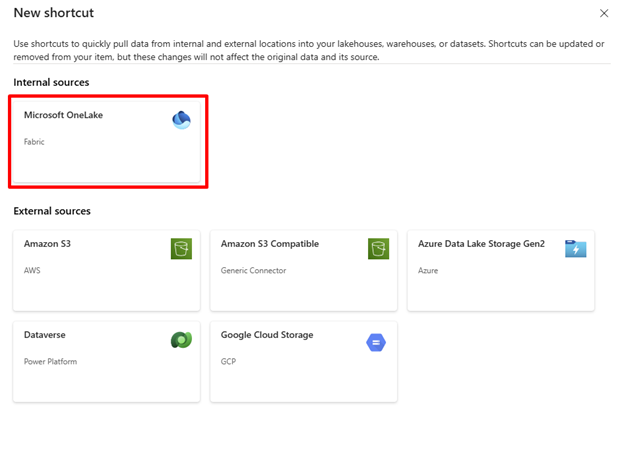

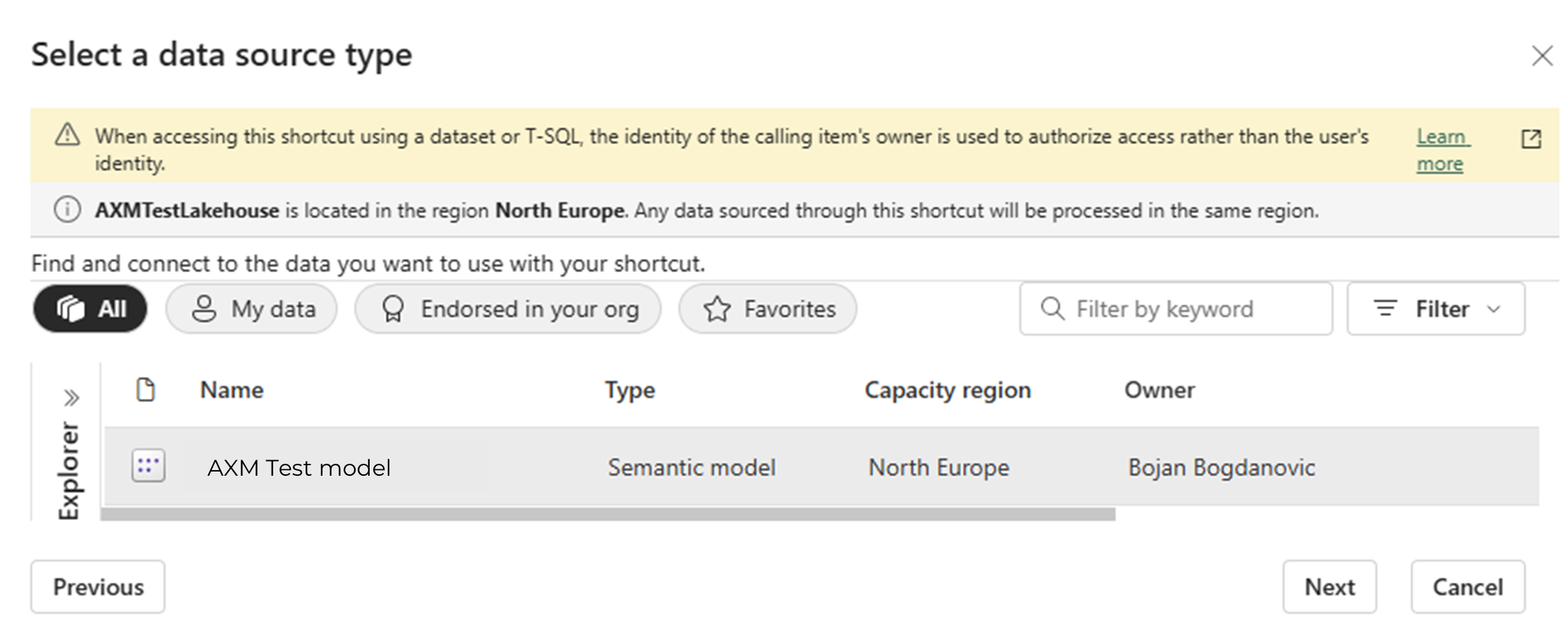

3. In the pop-up window, select Microsoft OneLake as a source.

4. If the refresh of your semantic model was successful and you have the proper permissions on it, you should see the semantic model in the list of available data sources.

5. After selecting your semantic model, you can choose which of its tables to create shortcuts for in your Lakehouse. You can select specific tables or all of them.

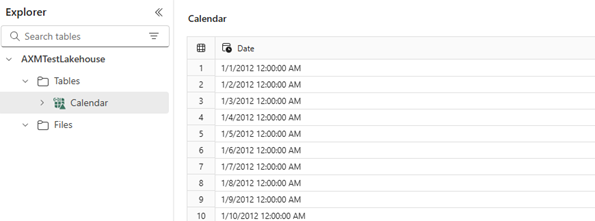

6. Once the creation process is complete, you can see your newly created shortcuts under the Tables section. Now you can perform any operation on them as if they were regular Lakehouse tables (e.g. querying them from a PySpark notebook).

7. Upon each model refresh, the latest data is exported to OneLake, so the Lakehouse always has the newest data.
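To illustrate the PySpark querying mentioned in step 6, here is a small sketch for a Fabric notebook, where a `spark` session is already available. The helper names and the `Sales` table are placeholders of mine. The path helper assumes the usual OneLake URI layout and is only needed when the Lakehouse is not attached to the notebook as the default one.

```python
def onelake_table_path(workspace: str, lakehouse: str, table: str) -> str:
    """Build the OneLake URI of a Lakehouse table (assumed URI layout)."""
    return (
        f"abfss://{workspace}@onelake.dfs.fabric.microsoft.com/"
        f"{lakehouse}.Lakehouse/Tables/{table}"
    )


def read_shortcut_by_name(spark, table_name: str):
    """Read a shortcut from the notebook's default Lakehouse by table name."""
    return spark.read.table(table_name)


def read_shortcut_by_path(spark, workspace: str, lakehouse: str, table: str):
    """Read a shortcut through its OneLake path as a Delta table."""
    return spark.read.format("delta").load(
        onelake_table_path(workspace, lakehouse, table)
    )
```

In a notebook, `read_shortcut_by_name(spark, "Sales").show(10)` would print the first rows of a shortcut named `Sales`.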

Limitations

There are certain limitations to consider when deciding whether this functionality meets your business requirements. The most important ones are:

- Measures, DirectQuery tables, hybrid tables, calculation group tables, and system-managed aggregation tables can’t be exported to Delta format tables.

- Only a single version of the Delta tables is exported and stored in OneLake. Old versions of the Delta tables are deleted after a successful export, which means there is no built-in mechanism for storing historical data or versioning. If you need history, you have to implement a workaround yourself.
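One possible workaround for the missing history, sketched below, is to append a dated copy of a shortcut table to a separate Delta table in the Lakehouse after each refresh. This is my own assumption about what such a workaround could look like, not a built-in feature. The `_history` suffix, the `snapshot_date` column and the function names are all hypothetical.

```python
from datetime import date
from typing import Optional


def snapshot_table_name(source_table: str) -> str:
    """Name of the history table that accumulates the snapshots."""
    return f"{source_table}_history"


def append_snapshot(
    spark, source_table: str, snapshot_date: Optional[date] = None
) -> str:
    """Append the current content of a shortcut table to its history table."""
    # Imported here so the naming helper above works without PySpark installed.
    from pyspark.sql import functions as F

    snapshot_date = snapshot_date or date.today()
    target = snapshot_table_name(source_table)
    (
        spark.read.table(source_table)
        # Stamp every row with the day the snapshot was taken.
        .withColumn("snapshot_date", F.lit(snapshot_date))
        .write.format("delta")
        .mode("append")
        .saveAsTable(target)
    )
    return target
```

Running `append_snapshot(spark, "Sales")` from a scheduled notebook would grow `Sales_history` by one snapshot per run. Note that running it twice on the same day appends the same data twice, so a real implementation would probably need a guard against duplicates.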